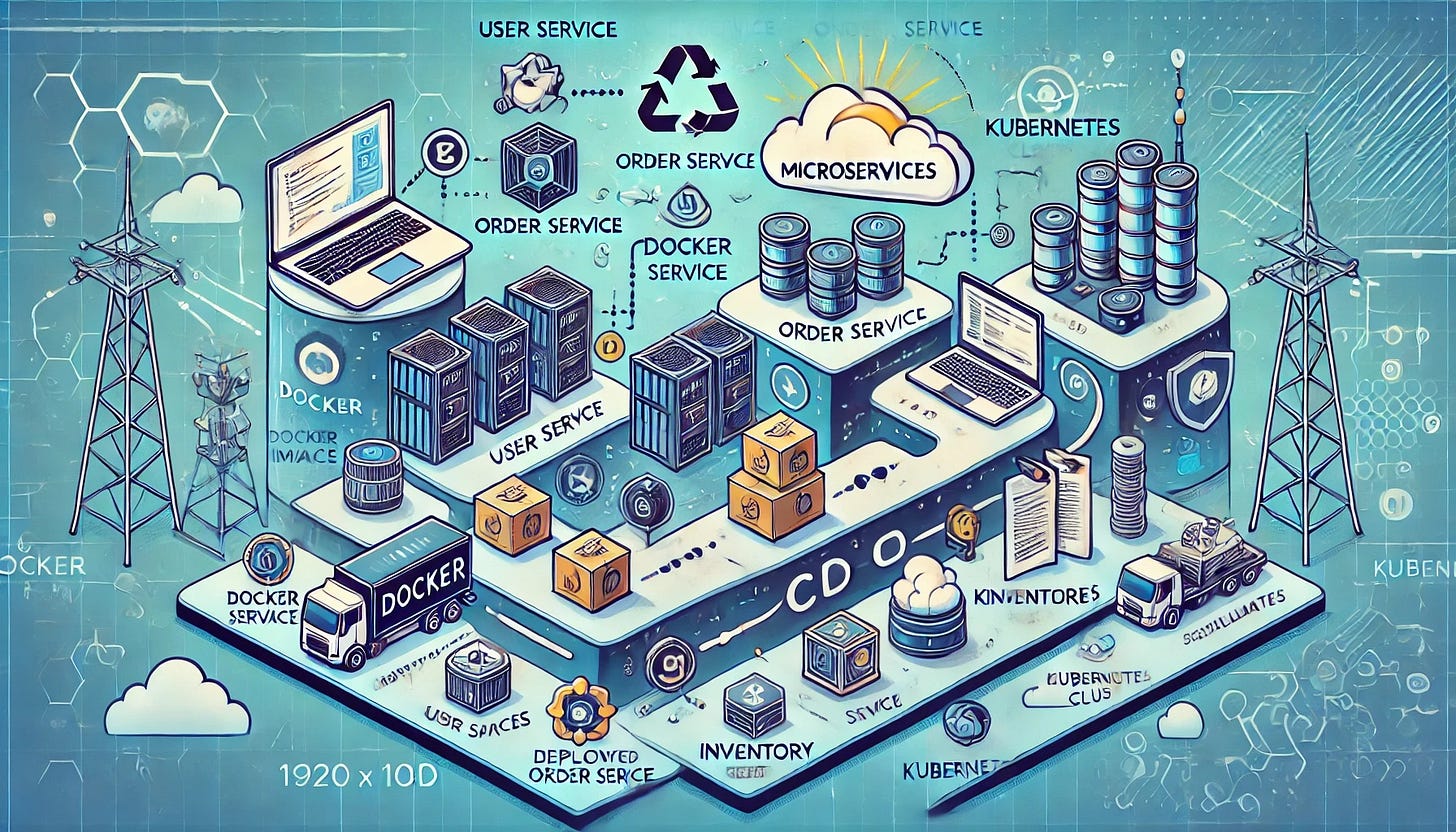

CI/CD Pipeline for Microservices with Docker and Kubernetes

Building, testing, and deploying microservices is challenging because you have to manage multiple services, dependencies, and deployment environments at once. A CI/CD pipeline streamlines this work by automating the repetitive steps. In this tutorial, we will create a complete CI/CD pipeline for a microservices architecture using Docker and Kubernetes. The pipeline will build Docker images, run tests, and deploy services to a Kubernetes cluster automatically. By the end, you will have a workflow that simplifies managing multiple microservices and makes deployments faster and more repeatable. This project is aimed at developers and DevOps engineers who want hands-on experience with modern CI/CD practices for containerized microservices.

Project Setup and Microservices Architecture

First, we will set the foundation for the project by defining the microservices architecture, containerizing the services, and preparing the project for a CI/CD pipeline. This includes setting up the repository structure, writing Dockerfiles for each service, and testing locally with Docker Compose.

Define Microservices

Microservices are small, independently deployable services that work together to form a complete application. For this tutorial, we’ll create three example services to represent a typical system:

User Service: Manages user information and authentication.

Order Service: Handles orders placed by users.

Inventory Service: Manages the stock of products.

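Each service is a standalone application with its own logic and dependencies and, in a production design, its own database. To keep the local setup simple, the Docker Compose file later in this section shares a single PostgreSQL instance between all three services. The services themselves are lightweight APIs with basic functionality, written in your preferred language and framework (e.g., Python with Flask, Node.js with Express, or Go).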
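To make the idea of services "working together" concrete, here is a minimal sketch of how the Order Service might ask the User Service whether a user exists before accepting an order. It uses only the Python standard library. The `/users/<id>` endpoint, the base URL, and the function names `fetch_user` and `create_order` are assumptions made for illustration; the tutorial does not define the services' APIs.

```python
import json
import urllib.error
import urllib.request

# Hypothetical base URL. Inside Docker Compose or Kubernetes this would
# usually be the service name, for example http://user-service:8080.
USER_SERVICE_URL = "http://localhost:8081"


def fetch_user(user_id, base_url=USER_SERVICE_URL, timeout=2.0):
    """Return the user as a dict, or None if the User Service answers 404."""
    url = f"{base_url}/users/{user_id}"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return json.loads(response.read().decode("utf-8"))
    except urllib.error.HTTPError as err:
        if err.code == 404:
            return None
        raise  # Any other HTTP error is unexpected here, so let it surface.


def create_order(user_id, item, base_url=USER_SERVICE_URL):
    """Build an order record only if the user exists in the User Service."""
    user = fetch_user(user_id, base_url=base_url)
    if user is None:
        raise ValueError(f"unknown user: {user_id}")
    return {"user_id": user["id"], "item": item, "status": "created"}
```

The point of the sketch is the boundary: the Order Service never reads the User Service's data store directly and only depends on its HTTP API, which is what lets each service be built and deployed on its own.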

Set Up Repository Structure

Organize the project repository so it’s easy to maintain multiple microservices and their associated configurations. A common structure might look like this:

    /microservices-ci-cd
    ├── user-service/
    │   ├── app/                # Application code
    │   ├── Dockerfile          # Dockerfile for containerizing the service
    │   ├── requirements.txt / package.json / go.mod
    │   └── tests/              # Unit tests for the service
    ├── order-service/
    ├── inventory-service/
    ├── docker-compose.yml      # Local testing of all services
    ├── kubernetes-manifests/   # Kubernetes YAML files for deployments and services
    └── Jenkinsfile / GitHub Actions YAML / GitLab CI YAML

This structure keeps each service self-contained while centralizing configurations for CI/CD and Kubernetes.
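As the repository grows, it can help to verify the layout automatically. The following is a small sketch of such a check using the directory names from the tree above. The function name `missing_entries` and the choice of which entries count as required are assumptions; adjust them to your own conventions.

```python
from pathlib import Path

# Service directory names taken from the layout above.
SERVICES = ("user-service", "order-service", "inventory-service")
# Entries every service directory is assumed to need.
REQUIRED = ("Dockerfile", "tests")


def missing_entries(repo_root):
    """Return a sorted list of expected paths (relative to repo_root) that do not exist."""
    root = Path(repo_root)
    missing = []
    for service in SERVICES:
        for name in REQUIRED:
            if not (root / service / name).exists():
                missing.append(f"{service}/{name}")
    if not (root / "docker-compose.yml").exists():
        missing.append("docker-compose.yml")
    return sorted(missing)
```

Calling `missing_entries(".")` from the repository root returns an empty list when the layout is complete, so a CI job could fail the build whenever the list is non-empty.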

Write Dockerfile for Each Service

Each microservice needs a Dockerfile to define how it will be containerized. Here’s an example Dockerfile for the User Service written in Node.js:

    # Use the official Node.js image as the base image
    FROM node:16

    # Set the working directory inside the container
    WORKDIR /app

    # Copy the package.json and package-lock.json for dependency installation
    COPY package*.json ./

    # Install dependencies
    RUN npm install

    # Copy the application code
    COPY . .

    # Expose the application port
    EXPOSE 8080

    # Run the application
    CMD ["npm", "start"]

For a Python service, the Dockerfile might look like this:

    # Use the official Python image as the base image
    FROM python:3.9

    # Set the working directory
    WORKDIR /app

    # Copy the requirements file and install dependencies
    COPY requirements.txt .
    RUN pip install -r requirements.txt

    # Copy the application code
    COPY . .

    # Expose the application port
    EXPOSE 5000

    # Run the application
    CMD ["python", "app.py"]

Repeat this process for all microservices, ensuring each one has a Dockerfile that specifies its dependencies and entry point.
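The Python Dockerfile runs `app.py` and exposes port 5000, but the file itself is not shown. Below is one possible sketch of such an entry point, written with the standard library's `http.server` instead of Flask so that it runs without extra dependencies. The routes, the in-memory `USERS` data, and the `PORT` environment variable are assumptions for illustration only.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# In-memory stand-in for a real database; the data is made up.
USERS = {1: {"id": 1, "name": "Ada"}}


def route(method, path):
    """Map a request to (status_code, payload). Kept pure so it is easy to unit test."""
    if method == "GET" and path == "/health":
        return 200, {"status": "ok"}
    if method == "GET" and path.startswith("/users/"):
        user_id = path[len("/users/"):]
        if user_id.isdecimal() and int(user_id) in USERS:
            return 200, USERS[int(user_id)]
        return 404, {"error": "user not found"}
    return 404, {"error": "not found"}


class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        status, payload = route("GET", self.path)
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def main():
    # Bind to 0.0.0.0 so the port published by Docker is reachable from
    # outside the container; 127.0.0.1 would only accept in-container traffic.
    port = int(os.environ.get("PORT", "5000"))  # PORT is an assumed variable name
    ThreadingHTTPServer(("0.0.0.0", port), Handler).serve_forever()
```

To use this as the container's entry point, end the file with `if __name__ == "__main__": main()`. Keeping the routing logic in the plain function `route` means the unit tests in `tests/` can call it directly without starting a server.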

Test Locally with Docker Compose

To verify that the microservices work together, create a docker-compose.yml file that defines how the services will interact in a local environment. This allows you to simulate the full application without deploying to Kubernetes yet.

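Here’s an example docker-compose.yml file for all three services. The port mappings assume that each service listens on port 8080 inside its container; a service built from the Python Dockerfile above, which listens on port 5000, would need a mapping such as "8082:5000" instead.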

    version: '3.8'

    services:
      user-service:
        build: ./user-service
        ports:
          - "8081:8080"
        environment:
          - NODE_ENV=development
        depends_on:
          - db

      order-service:
        build: ./order-service
        ports:
          - "8082:8080"
        depends_on:
          - db

      inventory-service:
        build: ./inventory-service
        ports:
          - "8083:8080"
        depends_on:
          - db

      db:
        image: postgres:13
        environment:
          POSTGRES_USER: user
          POSTGRES_PASSWORD: password
          POSTGRES_DB: microservices_db
        ports:
          - "5432:5432"

Run the following command to bring up the services:

    docker-compose up --build

Visit http://localhost:8081, http://localhost:8082, and http://localhost:8083 in your browser to test the APIs.
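Checking three URLs by hand gets tedious, so the same check can be scripted. The sketch below polls the three host ports published by the Compose file and reports which services respond. The names `SERVICE_URLS`, `is_up`, and `check_all` are illustrative, and treating any HTTP response (even a 404 on `/`) as "up" is an assumption that suits a basic smoke test but not a real health check.

```python
import urllib.error
import urllib.request

# Host ports published by the docker-compose.yml above.
SERVICE_URLS = {
    "user-service": "http://localhost:8081",
    "order-service": "http://localhost:8082",
    "inventory-service": "http://localhost:8083",
}


def is_up(url, timeout=2.0):
    """Return True if anything answers HTTP at url, even with an error status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The service responded (for example 404 on "/"), so it is running.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, DNS failure, or timeout.
        return False


def check_all(urls=SERVICE_URLS):
    """Return a dict mapping each service name to True or False."""
    return {name: is_up(url) for name, url in urls.items()}
```

With the stack running, `check_all()` returns a dictionary with one entry per service. A later CI stage could run the same function after `docker-compose up` and fail if any value is `False`.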

Push to Git Repository

Once your services are working locally, push the code to a remote Git repository for version control and integration with a CI/CD tool. If you’re using GitHub, follow these steps:

Initialize a Git repository:

    git init
    git add .
    git commit -m "Initial commit"

Create a remote repository on GitHub.

Link the remote repository:

    git remote add origin <your-repo-url>
    git branch -M main
    git push -u origin main

Your repository is now ready for CI/CD integration.

Next, we will set up the Continuous Integration (CI) process. This involves automating the build and test phases for each microservice to ensure the code is always production-ready before deployment.